- #AIRFLOW 2.0 TASK GROUPS MANUAL#

- #AIRFLOW 2.0 TASK GROUPS UPGRADE#

- #AIRFLOW 2.0 TASK GROUPS CODE#

- #AIRFLOW 2.0 TASK GROUPS OFFLINE#

The TaskFlow API can also be used with complex/conflicting Python dependencies. A new `_BranchPythonDecoratedOperator` adds a decorator for `BranchPythonOperator`, which wraps a Python callable and captures args/kwargs when it is called for execution. The `--from-revision` and `--from-version` options may only be used together with the `--show-sql-only` option, because when actually running migrations we should always downgrade from the current revision. If you want to preview the commands without executing them, use the `--show-sql-only` option. Alternatively, you may downgrade/upgrade to a specific Alembic revision ID with `--to-revision`.

#AIRFLOW 2.0 TASK GROUPS UPGRADE#

`airflow db downgrade` downgrades the database to your chosen version, and `airflow db upgrade` upgrades your database with the schema changes in the Airflow version you're upgrading to. The downgrade/upgrade SQL scripts can also be generated for your database, so you can run them against your database manually or just view the SQL queries that the downgrade/upgrade command would run.
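The rule about `--from-version` and `--show-sql-only` is easy to get wrong in scripts. The sketch below is a hypothetical Python helper (not part of Airflow) that only assembles the command-line arguments and enforces that rule; it assumes the `--to-version`, `--to-revision`, `--from-version` and `--show-sql-only` options described above and does not run anything itself.

```python
from typing import List, Optional


def build_db_migration_cmd(
    action: str,
    to_version: Optional[str] = None,
    to_revision: Optional[str] = None,
    from_version: Optional[str] = None,
    show_sql_only: bool = False,
) -> List[str]:
    """Hypothetical helper: build an `airflow db upgrade|downgrade` argument list."""
    if action not in ("upgrade", "downgrade"):
        raise ValueError("action must be 'upgrade' or 'downgrade'")
    if to_version is not None and to_revision is not None:
        raise ValueError("pass either to_version or to_revision, not both")
    if action == "downgrade" and to_version is None and to_revision is None:
        raise ValueError("a downgrade needs a target version or Alembic revision")
    if from_version is not None and not show_sql_only:
        # A real migration always starts from the database's current revision,
        # so a starting point only makes sense when just generating the SQL.
        raise ValueError("--from-version requires --show-sql-only")

    cmd = ["airflow", "db", action]
    if to_version is not None:
        cmd += ["--to-version", to_version]
    if to_revision is not None:
        cmd += ["--to-revision", to_revision]
    if from_version is not None:
        cmd += ["--from-version", from_version]
    if show_sql_only:
        cmd.append("--show-sql-only")
    return cmd
```

The returned list could then be passed to `subprocess.run(cmd, check=True)`; keeping the validation separate from execution makes it easy to preview the SQL first and run the real migration afterwards.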

#AIRFLOW 2.0 TASK GROUPS OFFLINE#

Airflow DB downgrade and offline generation of SQL scripts: a new command in Airflow 2.3.0 downgrades or upgrades the database to your chosen version.

You can now create connections using the JSON serialization format. Previously, in general, we could only store connections in the Airflow URI format. The Airflow URI format can be very tricky to work with, and although we have for some time had a convenience method with `Connection.get_uri`, using JSON is just simpler. JSON serialization can also be used when setting connections in environment variables.
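A minimal sketch of defining a connection through an environment variable in JSON form, assuming Airflow's `AIRFLOW_CONN_<CONN_ID>` naming convention and the JSON keys `conn_type`, `login`, `password`, `host`, `port`, `schema` and `extra`. The connection id, host and credentials are hypothetical. For comparison, it also builds roughly the equivalent URI, where special characters in the password must be URL-encoded.

```python
import json
import os
from urllib.parse import quote

# Hypothetical connection "my_postgres"; Airflow reads it from AIRFLOW_CONN_MY_POSTGRES.
conn = {
    "conn_type": "postgres",
    "login": "airflow",
    "password": "p@ss/word",  # no escaping needed inside JSON
    "host": "db.example.com",
    "port": 5432,
    "schema": "analytics",
    "extra": {"sslmode": "require"},
}
os.environ["AIRFLOW_CONN_MY_POSTGRES"] = json.dumps(conn)

# Roughly the same connection in URI form: '@' and '/' in the password
# have to be percent-encoded, which is what makes URIs tricky to write by hand.
uri = (
    f"{conn['conn_type']}://{quote(conn['login'], safe='')}:"
    f"{quote(conn['password'], safe='')}@{conn['host']}:{conn['port']}/"
    f"{conn['schema']}?sslmode={conn['extra']['sslmode']}"
)
```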

#AIRFLOW 2.0 TASK GROUPS MANUAL#

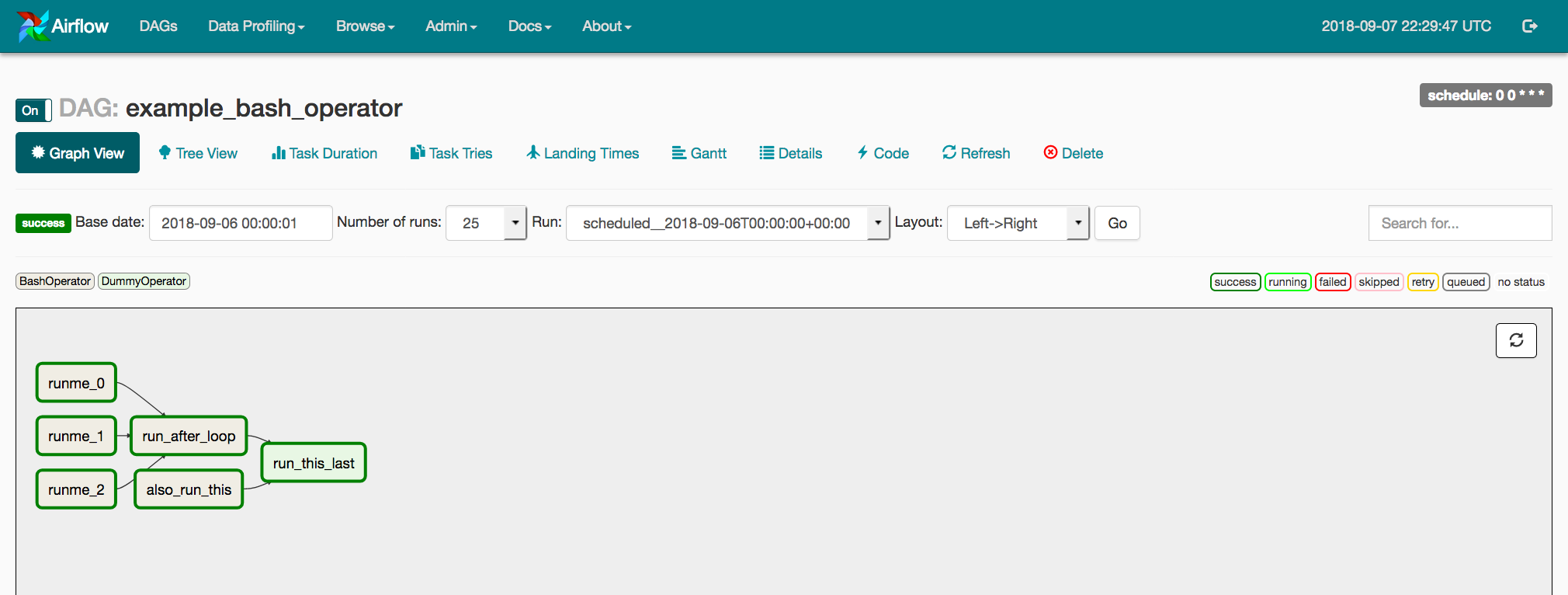

The Grid View replaces the Tree View, with a focus on task history. It makes the DAG display cleaner and provides more useful information than the previous Tree View. For example, the Grid View can quickly filter upstream tasks, so we can easily monitor the DAG. We can also see directly how long the DAG took to finish all task instances, which gives a sense of the DAG's average performance. The Grid View offers:

- quick performance checks, as it shows duration and other information on the same view;
- an additional "play" icon for manual triggers;
- a cleaner layout, leaving dependency lines and the structure of the DAG to the Graph View.

Dynamic Task Mapping is simple to implement with two new functions:

- `partial()`: parameters that remain constant for all tasks.
- `expand()`: the parameter or parameters that you want to map over. A separate parallel task is created for each input.

We can only expand input parameters or XCom results that are lists or dictionaries; any other data type raises an `UnmappableXComTypePushed` exception at runtime. Error handling applies to the entire set of tasks, so if any one of the dynamic tasks fails, Airflow marks the whole set as failed.

#AIRFLOW 2.0 TASK GROUPS CODE#

This new feature adds the possibility of creating tasks dynamically at runtime. Rather than having the DAG file collect the data and build the tasks itself, the scheduler can create them based on the results of a previous task. This is similar to defining tasks in a loop, except that the scheduler makes copies of the mapped task, one for each input, just before it is executed. With Dynamic Task Mapping, a task can even generate the list to iterate over.
